How Google will run the world with AI

Hey there! You might’ve noticed I’ve made some changes around here: the No Longer a Nincompoop newsletter is… well, no more.

Now that I’m working with global companies and government entities, and my newsletter doubles as a resume, pointing people to a newsletter with “nincompoop” in its name just doesn’t land the same way.

Avicenna is my consultancy, so the newsletter is moving under that name. Not much will change in the newsletter itself, except that I’m now getting help writing it so I can publish more consistently.

Here’s the tea 🍵

Gemini is king 👑

Google’s Agent Builder 🤖

Google is simulating fruit flies 🪰

Google’s own app builder ⚒️

My take on the best app builder 🤔

DolphinGemma - an LLM to predict dolphin sounds 🐬

Google is building the foundations of a generative media platform 🎥

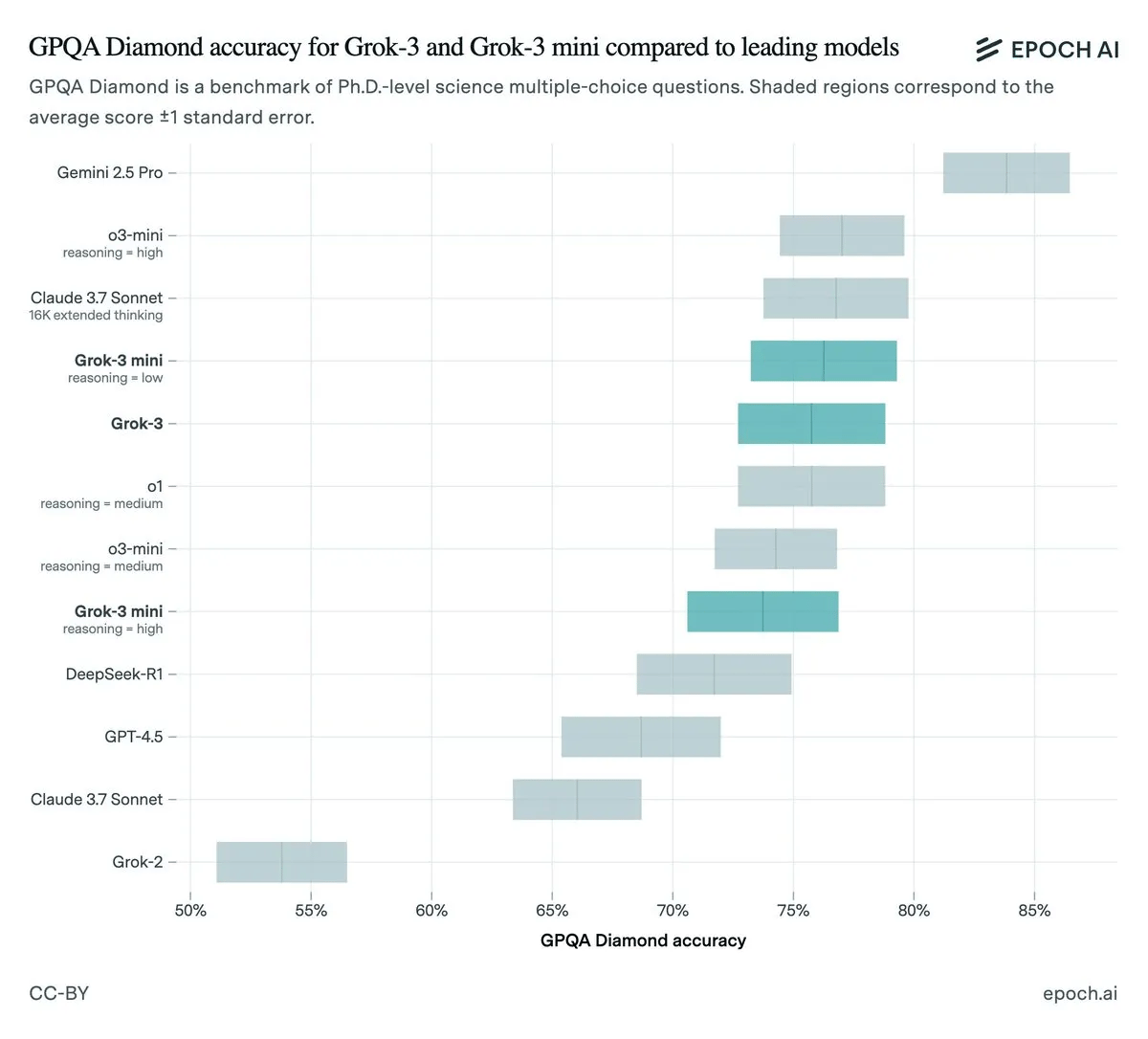

The best model on the planet

Google has the best AI model in the world. If I had to recommend a single model to anyone, it would be Gemini 2.5 Pro. It is unbelievably good.

The GPQA benchmark is a set of 448 multiple-choice questions carefully written by domain experts in biology, physics, and chemistry. The questions are designed to be “Google-proof”: skilled non-experts with unrestricted web access, who spend over 30 minutes per question on average, score only 34%.

Even experts, including PhD holders in the corresponding domains, achieve only 65% accuracy on this test.

Meanwhile, Gemini is at 85%+. The model is just better – look at the gap between Gemini and the rest.

This is the only model that I’ve used that challenges what I say, and provides alternative viewpoints and perspectives without being prompted to do so. Unlike the sycophantic ChatGPT, Gemini is more like an actual colleague.

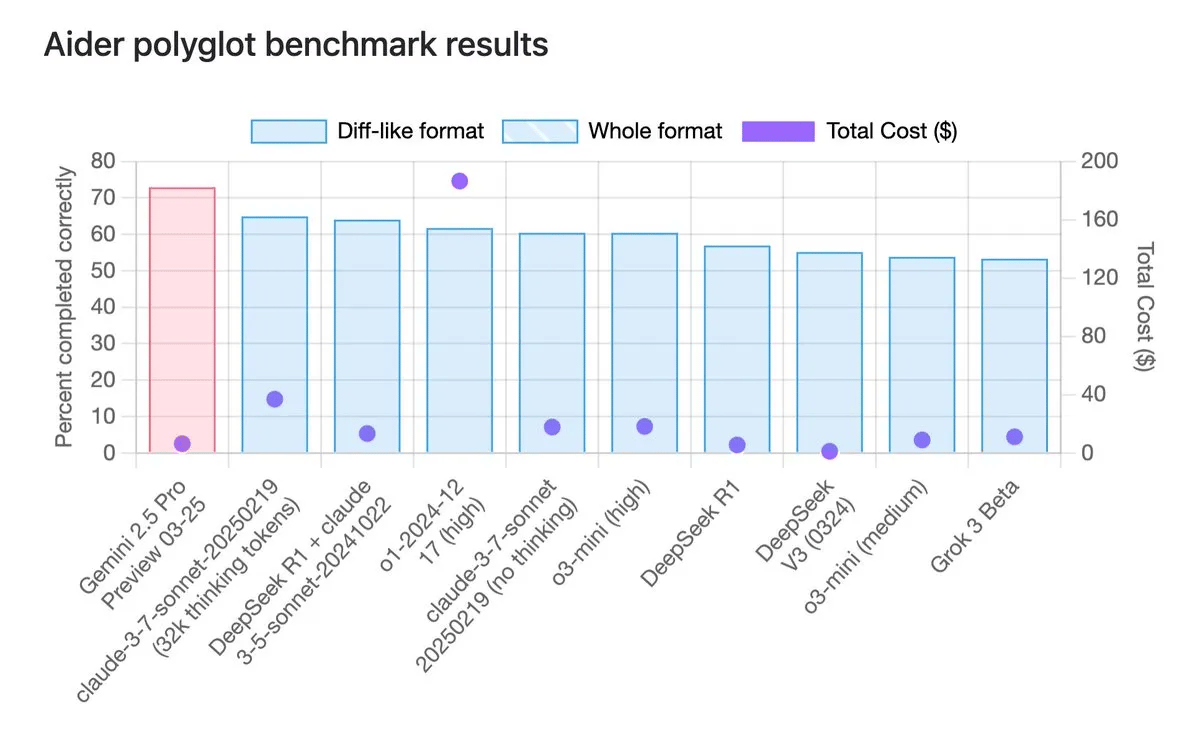

An even clearer sign of how significant this model is comes from the Aider benchmark, a set of coding exercises.

The dots in the columns represent the cost of the model. Not only is Gemini the best-performing model, it’s also one of the cheapest models, alongside DeepSeek.

The crazy part is that you can use this model for free on the aistudio.google.com website right now. You can also use it in the Gemini app.

I would highly recommend trying this model out. If you don’t need to use it for coding, use it for research.

Go to the Gemini app and select ‘Deep Research with 2.5 Pro’. The model can look through hundreds of web pages and create comprehensive and accurate reports within minutes.

You might wonder, ‘How can Google serve this model for free when everyone else is clamouring for NVIDIA GPUs and struggling to keep up with demand?’

Well, Google doesn’t rely on NVIDIA chips to run Gemini. They have their own TPUs. This is another reason Google is poised to dominate the market: they don’t depend on anyone. They have the best model, they have the talent, and they have their own chips, which are very good.

How do I know they’re good?

Ilya Sutskever’s Safe Superintelligence (SSI) is using Google TPUs [Link]. Their only goal is superintelligence.

The entire ecosystem

Not only does Google have the best model, their own chips, talent, money, and distribution, but they’re also getting into the framework space.

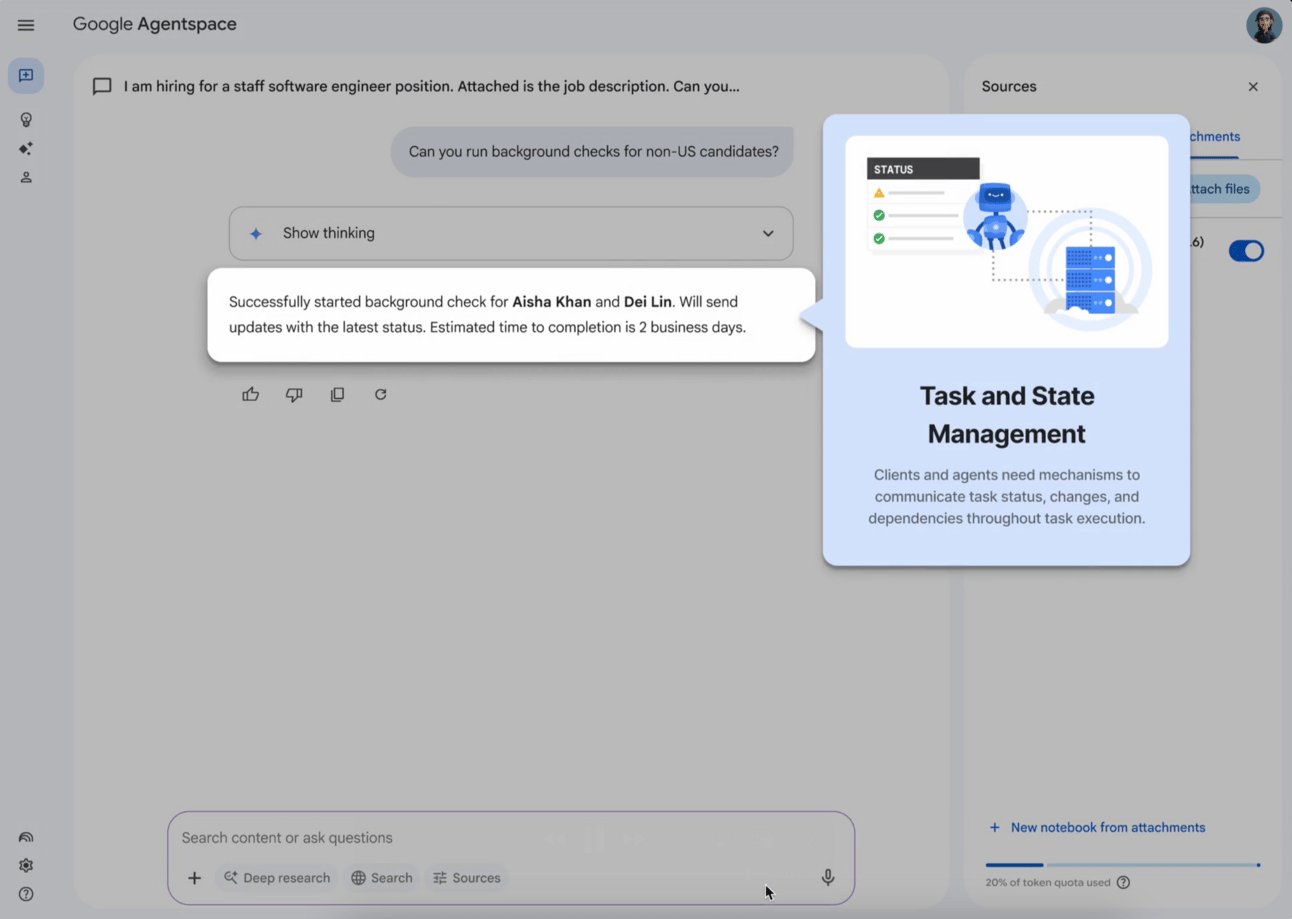

They recently launched Agent2Agent (A2A), an open source protocol to help agents communicate and work together to solve tasks. In the future, you won’t need to help your AI agent. Another AI agent will help your AI agent. That’s what this is.

It’s a mechanism to allow agents to talk and get help from each other, work across dozens of different applications, and even use different modalities like text, images, audio and video.

The agents can work on tasks across multiple days and provide updates in task management software like Jira.
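To make the idea concrete, here is a minimal sketch of one agent finding another by its advertised skills, delegating a task to it, and collecting progress updates. It is a toy model of the concept, not the A2A protocol or any Google SDK: the `AgentCard`, `Task`, `RemoteAgent`, and `delegate` names, the skill strings, and the task states are all hypothetical, and the “agents” are plain Python objects rather than separate services talking over a network.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, Dict, List, Optional


class TaskState(Enum):
    # Hypothetical lifecycle states for a delegated task (not the A2A spec)
    SUBMITTED = "submitted"
    WORKING = "working"
    COMPLETED = "completed"
    FAILED = "failed"


@dataclass
class AgentCard:
    # Hypothetical self-description an agent publishes so others can find it
    name: str
    skills: List[str]


@dataclass
class Task:
    task_id: str
    skill: str
    payload: str
    state: TaskState = TaskState.SUBMITTED
    updates: List[str] = field(default_factory=list)  # progress notes, e.g. for a tracker like Jira
    result: Optional[str] = None


class RemoteAgent:
    """Stand-in for another agent that accepts delegated tasks."""

    def __init__(self, card: AgentCard, handlers: Dict[str, Callable[[str], str]]):
        self.card = card
        self.handlers = handlers

    def handle(self, task: Task) -> Task:
        task.state = TaskState.WORKING
        task.updates.append(f"{self.card.name} started {task.skill}")
        handler = self.handlers.get(task.skill)
        if handler is None:
            task.state = TaskState.FAILED
            task.updates.append(f"{self.card.name} cannot run {task.skill}")
            return task
        task.result = handler(task.payload)
        task.state = TaskState.COMPLETED
        task.updates.append(f"{self.card.name} finished {task.skill}")
        return task


def delegate(agents: List[RemoteAgent], task: Task) -> Task:
    """Hand the task to the first agent whose card advertises the needed skill."""
    for agent in agents:
        if task.skill in agent.card.skills:
            return agent.handle(task)
    task.state = TaskState.FAILED
    task.updates.append(f"no agent advertises {task.skill}")
    return task


# Usage: a summariser agent that keeps only the first sentence
summariser = RemoteAgent(
    AgentCard(name="summariser", skills=["summarise"]),
    {"summarise": lambda text: text.split(".")[0] + "."},
)
done = delegate([summariser], Task("t1", "summarise", "First point. Second point."))
missing = delegate([summariser], Task("t2", "translate", "Bonjour"))
```

The design choice worth noting is that agents are found by what they advertise rather than by name, so the requesting agent never needs to know in advance who will do the work.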

This is the future of work. Millions of agents will be crawling the web, interacting with other agents, sending and retrieving info, and generating revenue.

Best get in early while you can.

Google has also released the Agent Development Kit (ADK), a framework for building AI agents. It supports multi-agent systems, tool use, and MCP. They’ve also released Agent Garden, a collection of ready-to-use samples and tools directly accessible within ADK. You can use pre-built agent patterns, components, and connections to enterprise applications like HubSpot, Salesforce, and so on.
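At its core, “tool use” in an agent framework means the model picks a function and its arguments, and the framework runs it. The sketch below shows that loop in plain Python under stated assumptions. It does not use ADK: `TOOLS`, `tool`, `fake_model`, and `run_agent` are hypothetical names, and `fake_model` is a stub standing in for a real LLM call that would return a structured tool call.

```python
import json
from typing import Callable, Dict

# Hypothetical tool registry: maps a tool name to a plain Python function
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Decorator that registers a function as a tool the agent may call."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_weather(city: str) -> str:
    # Stub data; a real tool would call an external API
    return f"Sunny in {city}"


@tool
def add(a: float, b: float) -> str:
    return str(a + b)


def fake_model(prompt: str) -> str:
    """Stand-in for an LLM: emits a JSON tool call instead of free text."""
    if "weather" in prompt.lower():
        return json.dumps({"tool": "get_weather", "args": {"city": "Sydney"}})
    return json.dumps({"tool": "add", "args": {"a": 2, "b": 3}})


def run_agent(prompt: str) -> str:
    """One step of a tool-use loop: ask the model, then execute the tool it picked."""
    call = json.loads(fake_model(prompt))
    fn = TOOLS.get(call["tool"])
    if fn is None:
        return f"unknown tool {call['tool']!r}"
    return fn(**call["args"])


print(run_agent("What's the weather like?"))
print(run_agent("Add two and three"))
```

A real framework would feed the tool’s result back to the model and repeat until it produces a final answer; this sketch stops after a single step.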

To actually deploy these agents, they’ve also released Agent Engine, which lets anyone deploy agents built with any framework, with the proper security controls in place.

Once again, Google is trying to own everything. The models, the platforms, the frameworks, all of it. It’s incredible to see this speed of development and iteration from such a large company, especially considering how they were doing just a year ago.

Google has built an AI model that simulates the behaviour of a fruit fly

In partnership with the Howard Hughes Medical Institute (HHMI), DeepMind has built an AI model that accurately simulates how fruit flies walk, fly, and behave.

They used MuJoCo, their own open-source physics simulator, which is primarily used for robotics and biomechanics, to develop the simulation.

The result is a highly detailed simulated fly that replicates the intricacies of real fruit flies, like precise exoskeleton modelling and wing movement dynamics. The simulated fly can even use its eyes to control its actions.

They trained a neural network on real fly behaviour from recorded videos, then let it control the simulated fly in the physics engine. It’s fascinating stuff.
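The general recipe (fit a controller to recorded behaviour, then let it drive a physics simulation) can be shown at toy scale. This is not DeepMind’s method: the 1-D point mass stands in for MuJoCo, a damped-spring “expert” stands in for the recorded fly behaviour, and a linear least-squares policy stands in for the neural network. All function names and constants are assumptions for illustration.

```python
import random
from typing import List, Tuple

DT = 0.05  # simulation time step in seconds (assumed)


def step(x: float, v: float, force: float) -> Tuple[float, float]:
    """Toy physics engine: unit mass on a line, semi-implicit Euler integration."""
    v = v + force * DT
    x = x + v * DT
    return x, v


def expert_policy(x: float, v: float) -> float:
    # Stand-in for "recorded real behaviour": a damped spring pulling towards 0
    return -2.0 * x - 0.5 * v


def collect_demos(n_episodes: int = 5, steps: int = 100) -> List[Tuple[float, float, float]]:
    """Record (position, velocity, action) triples from the expert."""
    rng = random.Random(0)
    demos = []
    for _ in range(n_episodes):
        x, v = rng.uniform(-1, 1), rng.uniform(-1, 1)
        for _ in range(steps):
            a = expert_policy(x, v)
            demos.append((x, v, a))
            x, v = step(x, v, a)
    return demos


def fit_linear_policy(demos: List[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Behavioural cloning by least squares: action = w_x * x + w_v * v."""
    sxx = sum(x * x for x, _, _ in demos)
    sxv = sum(x * v for x, v, _ in demos)
    svv = sum(v * v for _, v, _ in demos)
    sxa = sum(x * a for x, _, a in demos)
    sva = sum(v * a for _, v, a in demos)
    det = sxx * svv - sxv * sxv
    # Solve the 2x2 normal equations with Cramer's rule
    w_x = (sxa * svv - sva * sxv) / det
    w_v = (sva * sxx - sxa * sxv) / det
    return w_x, w_v


def rollout(w_x: float, w_v: float, x: float = 1.0, v: float = 0.0, steps: int = 400) -> float:
    """Let the learned policy drive the physics engine; return the final position."""
    for _ in range(steps):
        x, v = step(x, v, w_x * x + w_v * v)
    return x


w_x, w_v = fit_linear_policy(collect_demos())
final_x = rollout(w_x, w_v)
print(w_x, w_v, final_x)
```

Because the toy expert is itself linear, least squares recovers its gains almost exactly. Real fly behaviour is far messier and higher-dimensional, which is why a neural network is needed there.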

But what’s actually the point?

Google believes that by doing this, they’ll better understand the connection between the body and brain in fruit flies (and more broadly for other species) and how behaviour can change based on environment. This is important for a number of reasons:

Neuroscience: Google’s fruit fly simulation could help inform existing technologies like Neuralink, which is running research and human clinical trials to help people with quadriplegia control computers with their thoughts.

Robotics: If we can figure out how to accurately mimic behaviours in different environments, we can eventually do the same for human behaviours and use that knowledge to train robots to reproduce them.

AI-driven entertainment: AI-generated content like games, movies and cartoons is the future of the entertainment industry. Using prompts, people could imagine entire worlds and AI could realistically generate them on the fly. Projects like the fruit fly simulation, which will change our understanding of movement and behaviour, could be integral to enhancing the accuracy of models like these.

It’s all about building world models, which both DeepMind and NVIDIA have spoken about before. If we can create realistic simulations of behaviours that match real-world behaviours, we can create an unlimited number of simulated worlds, which can be used for training robots as well as for entertainment. At the end of the day, it all boils down to physics.

Learn more here [Link].

Google has released Firebase Studio, a competitor to Lovable, Replit, and Cursor

It’s kind of crazy that Google is now shipping like a startup. How much they’ve turned things around since the release of ChatGPT is genuinely impressive, considering how badly they started out.

Google recently released Firebase Studio, an agentic, cloud-based development environment that lets users build and deploy full-stack AI apps. It’s been designed as an offshoot of Firebase, Google’s platform for database management, authentication, hosting, and more.

Think of an AI that has access to all the features of Google’s database, authentication, and pretty much everything else needed to build applications. This is a no-brainer for Google. After all, platforms like Replit have already found a lot of success doing this.

It makes complete sense for Google to get into this as well, considering they own all the infrastructure: their AI, their database, their authentication, their chips, their everything. Google is in the best possible position to dominate the market for AI app builders.

Unfortunately, Firebase Studio isn’t that great right now. I wouldn’t recommend using it over Replit and others. But this is a sign of what’s to come, and I can see Google eating a large chunk of the market.

You can dive even further into it here [Link].

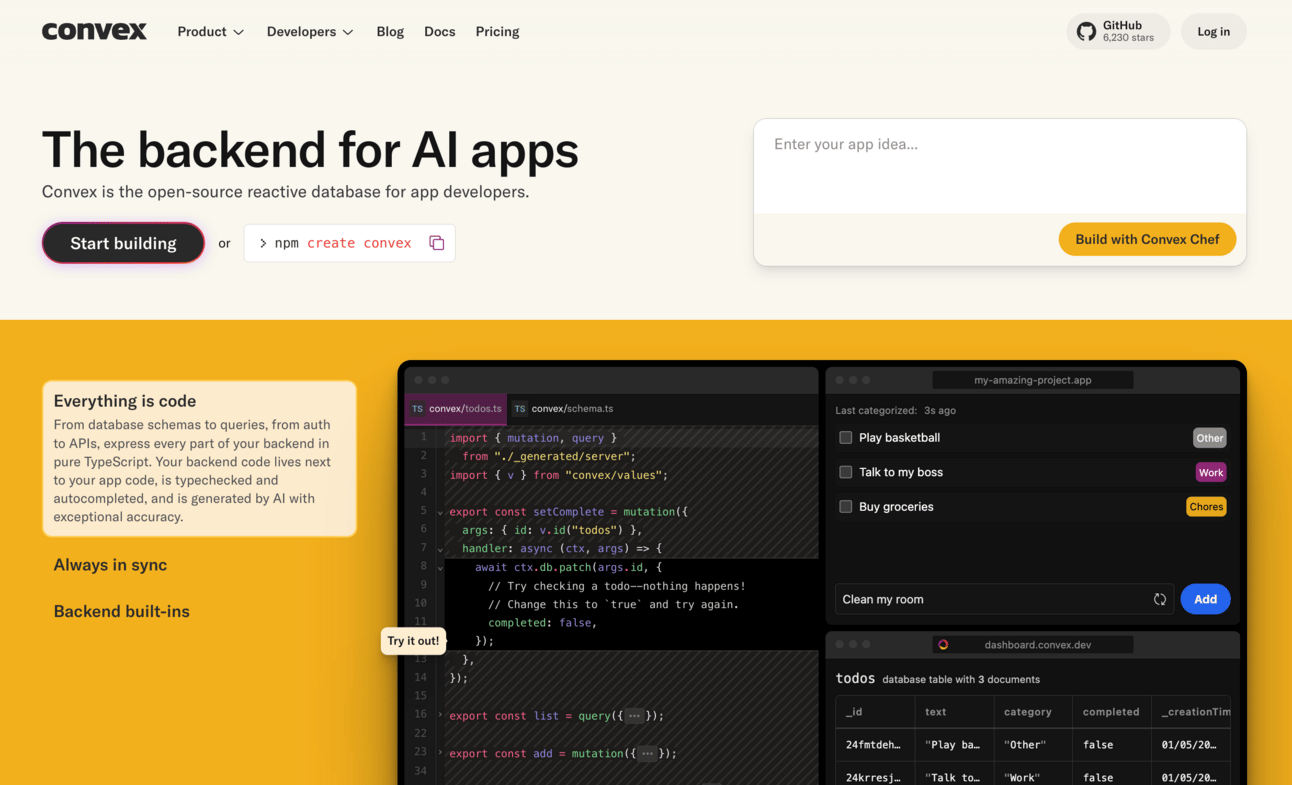

Convex Dev

I recently came across another application similar to Firebase Studio that I just have to share. Convex.dev is a backend for apps. They have their own implementation of a database, authentication, and so on.

What makes Convex so cool is that they’ve partnered with Bolt to release their own AI app builder that uses Convex on the backend. All I can say is that you must try it.

I’ve used every single AI app builder, but none of them has one-shotted authentication the way Convex has. Its ability to build a functional, working backend almost instantly is the best thing about it, and truly separates it from other applications like Replit and Firebase Studio. Other no-code builders like Lovable are good, but they don’t have their own backend systems and they require the user to do the work to connect to a database.

If you want to build a functional application quickly, I would recommend Convex.

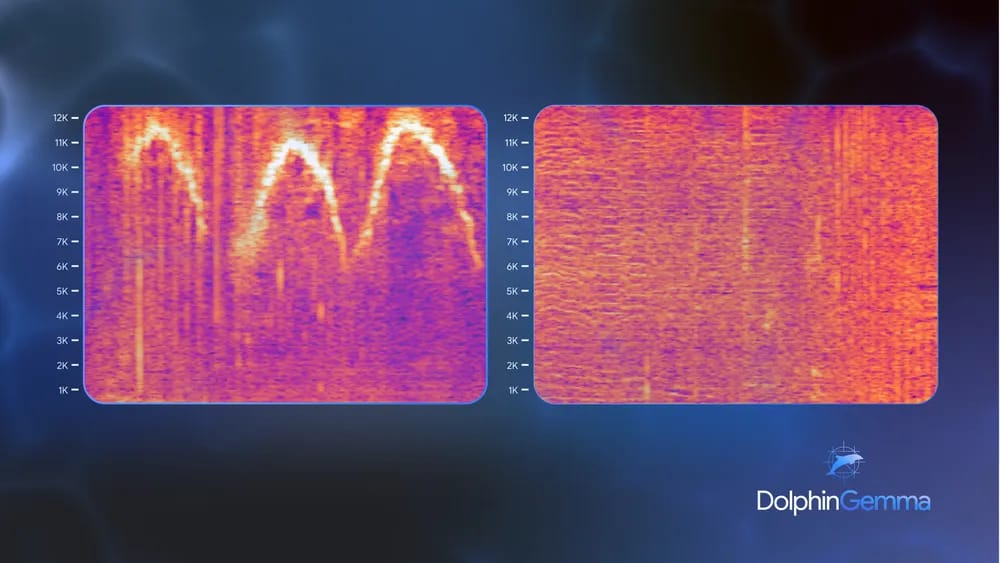

Google has announced DolphinGemma, an LLM to predict dolphin sounds

Could advancements in AI let us talk to dolphins one day?

That’s the question Google is working on with DolphinGemma, their new LLM that predicts dolphin sounds. Yes, it is as wild and futuristic as it says on the tin.

Google has partnered with Georgia Tech and the Wild Dolphin Project to build this LLM, which has been trained on over 40 years of dolphin sounds to ‘learn the structure of dolphin vocalisations and generate novel dolphin-like sound sequences’.

Simply put, they’re trying to figure out what dolphins are saying, and how to talk back. Like regular LLMs, DolphinGemma predicts the next dolphin sound based on the sounds that came before it. The craziest part is that the model runs on Pixel phones.
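The “predict the next sound from the previous ones” idea can be illustrated with something far simpler than an LLM: a bigram model that counts which sound tends to follow which. This is a toy illustration only, not how DolphinGemma works. The sound labels and recordings below are made up, and DolphinGemma itself is a much larger model that works from recorded audio rather than hand-labelled tokens.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigram(sequences: List[List[str]]) -> Dict[str, Counter]:
    """Count how often each sound token follows another across all recordings."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts: Dict[str, Counter], prev: str) -> Optional[str]:
    """Return the most frequent follower of `prev`, or None if it was never seen."""
    if prev not in counts:
        return None
    return counts[prev].most_common(1)[0][0]


def generate(counts: Dict[str, Counter], start: str, length: int) -> List[str]:
    """Greedily extend a sequence one predicted token at a time."""
    seq = [start]
    while len(seq) < length:
        nxt = predict_next(counts, seq[-1])
        if nxt is None:
            break
        seq.append(nxt)
    return seq


# Hypothetical labelled recordings (made-up data)
recordings = [
    ["whistle", "click", "click", "burst"],
    ["whistle", "click", "burst", "whistle"],
    ["click", "click", "burst", "whistle"],
]
model = train_bigram(recordings)
print(predict_next(model, "click"))    # burst (follows "click" 3 times, vs 2 for "click")
print(generate(model, "whistle", 5))   # ['whistle', 'click', 'burst', 'whistle', 'click']
```

An LLM replaces the simple counts with a neural network that conditions on a long history of sounds, not just the last one, but the prediction task is the same.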

DolphinGemma opens an incredibly interesting can of worms around interspecies communication. In Google’s version of the future, we could effectively talk to animals; not quite Dr. Dolittle style, but using technology to decode, analyse, and replicate sounds.

I’m not gonna lie, I don’t know how I feel about this… but I can recognise it’s a massive leap that will enable some serious breakthroughs in both technology and animal science, just like the fruit fly project. What scares me is that even if we do figure out how to communicate, I’m not sure dolphins will even want to engage with us. For some reason, I just don’t think they will.

Google plans to share DolphinGemma more broadly with the research community later this year. You can read more about it here [Link].

Google is building out its generative media capabilities with new additions Lyria 2 and Veo 2

Google is putting together the pieces to form its own generative media platform.

Slowly but surely, Google has been building out a killer toolbox of generative media products, including Chirp 3 for voice generation, Imagen 3 for text-to-image, Veo 2 for video generation with editing and camera controls, and Lyria 2 for music.

When you combine each of these, you’ve got the capacity to develop practically anything. Movies, music, games; it’s all there.

Considering how good Google’s models have become, and the fact that Veo 2 is now the best video generation model on the planet, I can see Google completely owning this space. Veo 2 is now available via the API as well.

You can read all about the updates and how to get started using these in Vertex AI here [Link].

If you are interested in other generative media platforms, I’d recommend trying out Flora. It has a very sleek UI and UX and lets you use all of the different models in a single place.

More you might’ve missed

Google CEO Sundar Pichai says that more than a third of the company’s code is generated using AI [Link]. Microsoft CEO Satya Nadella has said something similar [Link]. Cursor is currently processing over a billion lines of code a day [Link].

Google is shining the spotlight on generative AI with a list of over 600 real-world use cases to explore from the likes of Mercedes-Benz, Deloitte, and Adobe [Link].

I just have to share this great thread on the history of the transformer architecture. It’s a solid little history lesson on the origins of attention mechanisms and the creation of the famous “Attention Is All You Need” paper. It also shows how many different things had to happen for us to get where we are now [Link].

This thread shows experiments people are doing with Gemini 2.5’s image gen [Link].

Chat SDK is a free, open-source template for building powerful chatbot applications. You can use different modes, and it also has a canvas feature and can display JSX inline – so it’s basically generative UI as well. A very good starting point for building apps [Link].

Gemini 2.5 Pro support has been added to Claude Code [Link].

Notion has released an MCP server for its API [Link].

Reddit has integrated Gemini for Reddit Answers [Link]. I think this makes sense considering Gemini has the largest context window of the main models, and is also the best model available right now. There is massive alpha in letting Gemini look through old Reddit posts for deep research, especially considering Reddit has over a decade of user-rated data. This will be invaluable for AIs.

As always, thanks for reading – and if you’re loving these AI insights, please consider becoming a premium subscriber. It means I can keep delivering high-quality AI news to you all.

Written by a human named Nofil
