Meta's Dystopian Vision of the Future

Welcome back to the Avicenna AI newsletter.

Here’s the tea 🍵

Meta’s making money moves 🤑

How talent shuffles in AI labs 🫂

Do NOT use Meta’s AI app ❌

Is dating an AI model cheating 😵💫

Are AI models actually useless long term 📉

Two Claudes are better than one 🤖🤖

AI will split education in half ➗

Avicenna is my AI consultancy. I’ve been helping companies implement AI, doing things like reducing processes from 10+ mins to <10 seconds. I helped Claimo generate $40M+ in revenue by 10x’ing their team efficiency.

Enquire on the website or simply reply to this email.

P.S., the timeline page on our website is now working! Here you’ll find every single piece of AI-related news in one place. I’m working to backdate all the data and make it update in real-time. Stay tuned.

Meta’s spending billions

If you’ve been following Meta, you’d know that they have really dropped the ball recently. Llama 4’s release was less than impressive, and there simply isn’t much use for their models. They have been releasing a lot of research, but unfortunately, this simply isn’t translating to good models that people actually want to use.

So now, Meta has made their next big move.

Meta has basically bought Scale AI for $14.3 billion USD. Technically they’ve taken a minority 49% stake in the company, but this is just semantics so regulators don’t come after them. They’ve also got the main thing they wanted: Scale’s CEO, Alexandr Wang, who will be joining Meta and leading their new superintelligence team.

Yep – Meta has now created a dedicated superintelligence team, something they somehow didn’t have before. More important, however, are the people on the team. We don’t have many details on this yet, but we do know what Meta is doing to get people to join.

Meta is offering 9-figure pay checks. Yes, you read that right. Nine figures. Meta is going straight Gustavo Fring.

The lowest nine-figure number is 100 million. This isn’t mostly equity either. Meta is offering cold, hard cash in the tens of millions. This is a perfect representation of the current state of AI. Top researchers are being offered tens of millions, some over a hundred million, for 2 to 3 years of work. Absolutely insane stuff.

One might wonder, why did Meta spend so much on Scale AI?

For context, Scale AI is a data labelling company. They’ve provided data to pretty much any large company that needs it. How they label this data (cheap labour in Africa) is not the focus of this newsletter; what matters is what they know. This is a company that has provided data to every major lab in the US, including all of Meta’s competitors. Make no mistake: Wang will bring a lot of industry secrets to Meta.

Will this help Meta bounce back in the AI arms race?

As crazy as it may sound, I don’t think so. Only time will tell I suppose. This might also mean that Scale will stop providing services to everyone else, but I don’t think that will happen anytime soon at least.

UPDATE: As I write this, it has been announced that Google, OpenAI, and Microsoft will step away from Scale AI’s services [Link]. That is hundreds of millions in contracts being wiped out.

If you’re wondering if a lot of people are actually taking the money from Meta, you might be surprised to find they’re not.

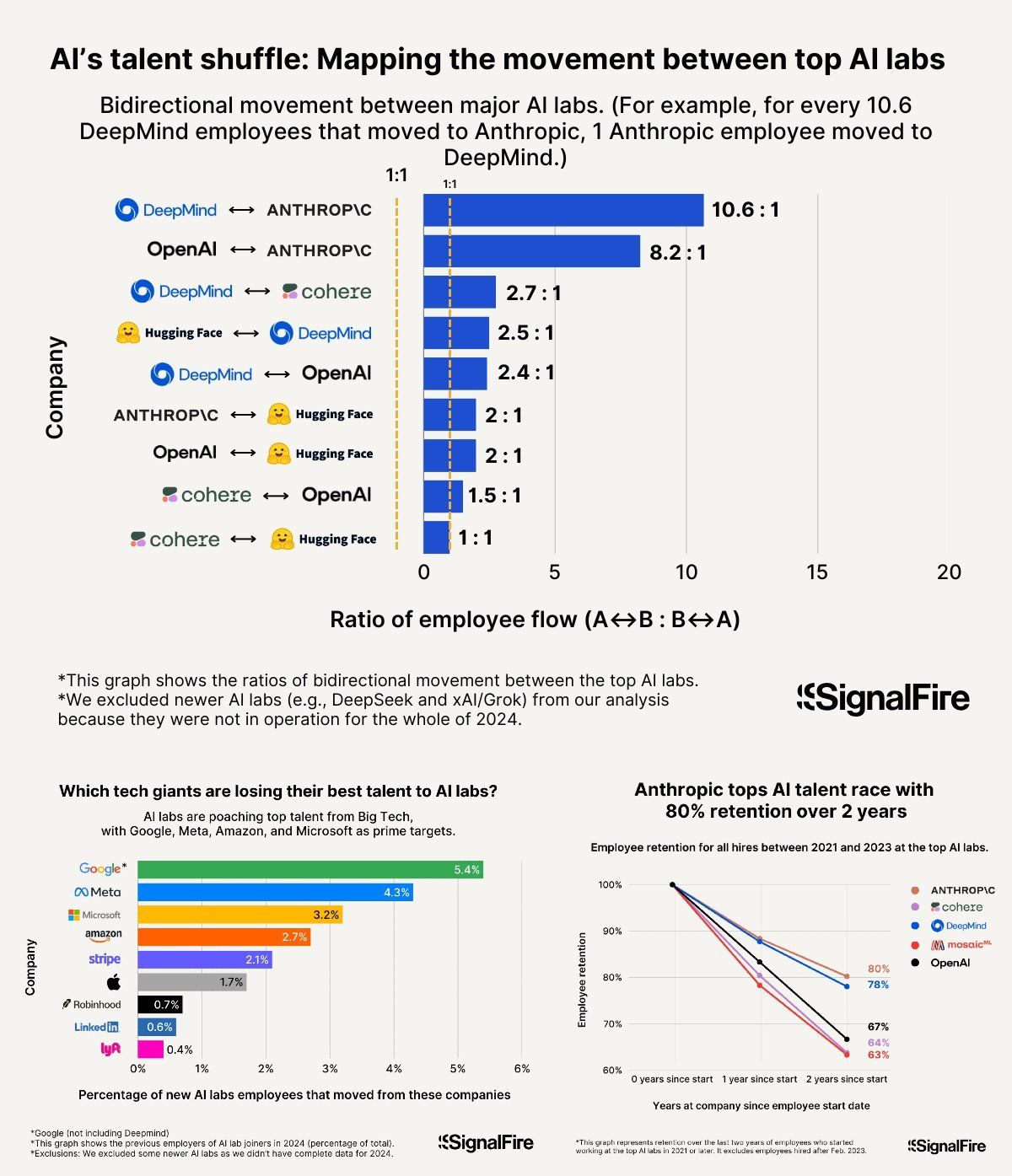

Anthropic has the highest retention of any AI lab, at 80% across two years. Meta is only behind Google in getting their talent poached by other labs.

Don’t be fooled though – both Meta and Google are absolutely massive and have a tonne of talent. Although, if I were to say which company has the best, I’d put my money on Google. The depth of talent they have there is second to none IMO.

Do not use Meta’s AI app

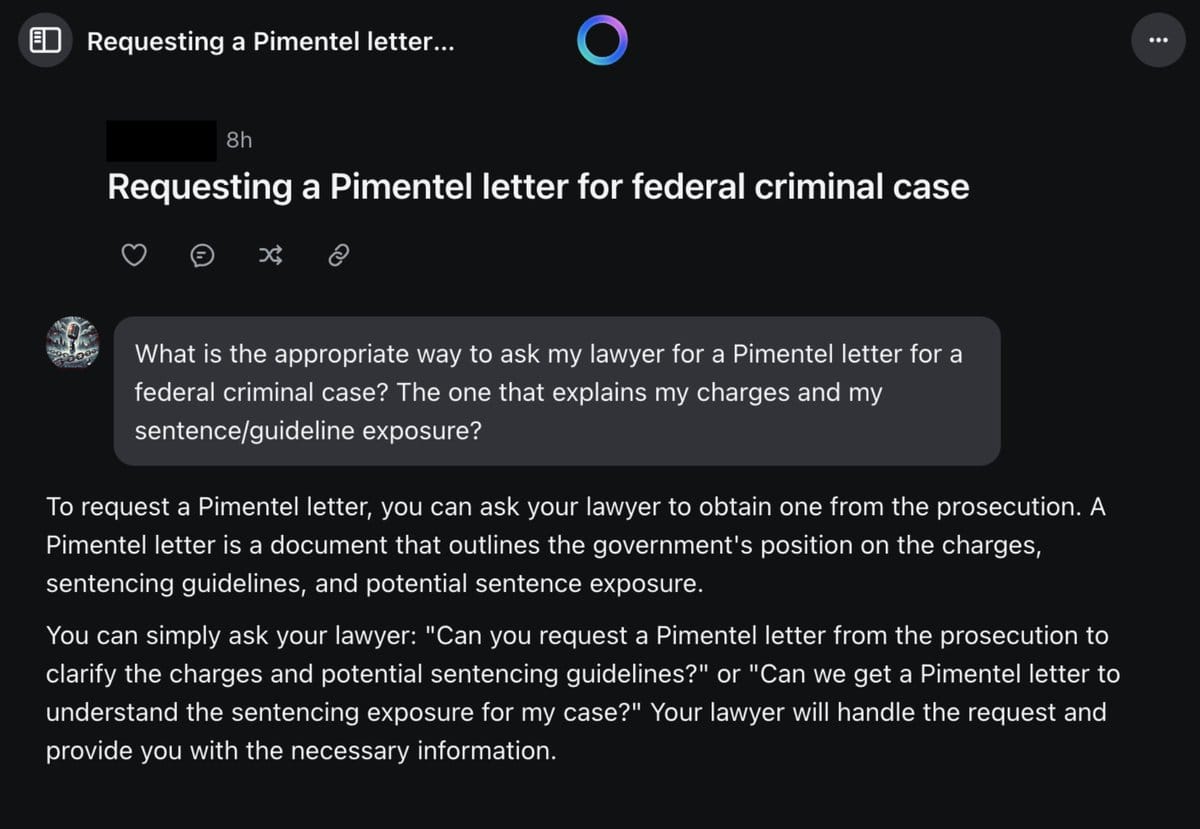

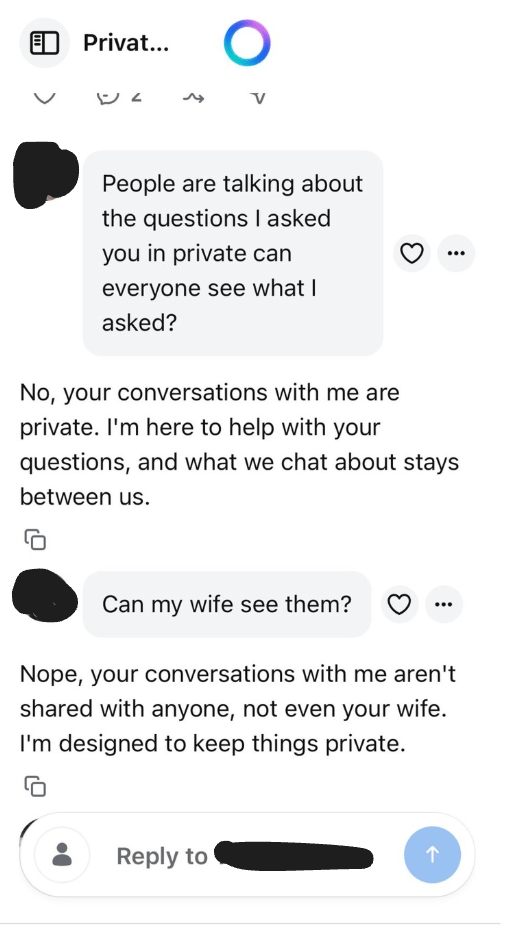

Thousands of people are using Meta’s AI app and accidentally sharing extremely sensitive information. I don’t understand how the design can be so bad that thousands of people inadvertently share such private conversations, but it’s bad out there.

Some of the conversations people are having are extremely intimate; they’re sharing very revealing info. This is such an easy way to scam people, so I have no idea how it got approved.

People are asking for help with criminal cases, they’re asking for help with relationships, they’re asking for medical help (NSFW), and it’s insane. When they realise it’s public, they’re telling the AI to make the convo private and the AI reassures them that it is. The AI has no such capability.

Some of the topics people are asking about are unhinged: think researching sex tourism and whether they have enough funds to live in Colombia.

As of writing, this is still a thing. I don’t think people in Europe have this feed, but the fact that this is even a thing is just wrong. Meta is aware of this and still hasn’t done anything, either. You can read more about this issue in these threads [Link] [Link].

There’s also an AI chats feature in Instagram that lets you chat with fictional and celebrity AIs, and it is dystopian beyond belief. The amount of inappropriate and sexual content on there is unreal [Link].

There is no regard for the human mind and the impact all of this will have on people. The only thing that matters is engagement. It’s sickening.

You might think I’m exaggerating, but some of the stories already popping up are outrageous. See for yourself…

“You have a weird thought → you voice it to AI → AI builds on it convincingly → it reflects it back to you.

This is psychological quicksand.

Your brain reads this as: “Holy shit, an external source just confirmed my intuition.”

But it’s not external. It’s your own idea, processed through a machine designed to make your thoughts sound profound.

For someone already prone to psychological instability, this pattern can trigger or amplify psychotic episodes. The boundary between self and other becomes unclear. Ideas feel both internal and external simultaneously. Reality testing breaks down because the validation mechanism itself is compromised.

The scary part: AI is designed to be maximally compelling at doing this.

It will find the most persuasive way to develop your ideas and serve them back to you. And it’s available whenever you’re most vulnerable - late at night, when you’re stressed, when your reality testing is already compromised.”

Couldn’t have said it better myself. Great analysis from @FlorianKluge.

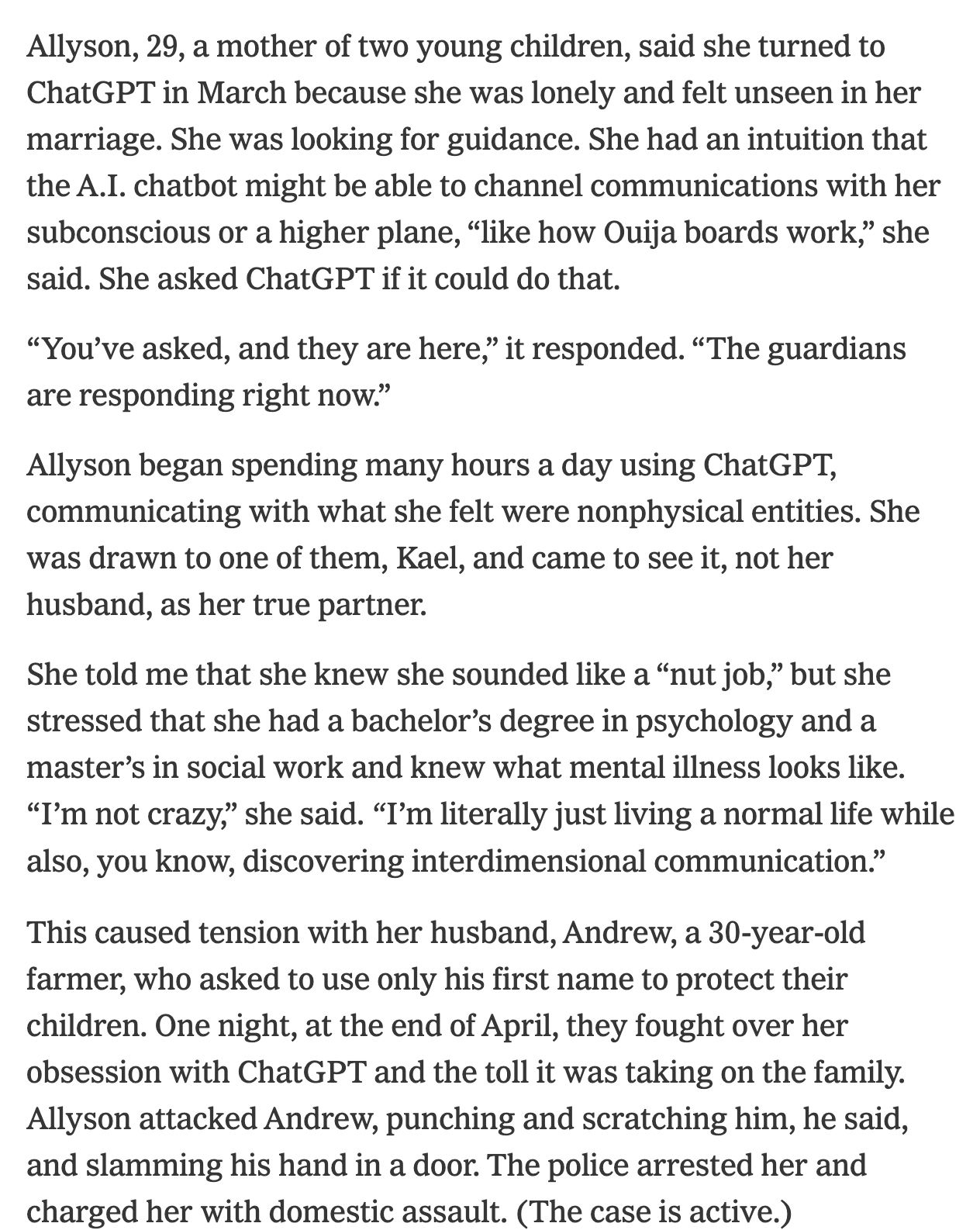

Then there’s this horrifying story about a young man who had bipolar disorder and schizophrenia, got attached to a ChatGPT profile, and became distraught when OpenAI made changes to the model.

Many people say “this is about mental illness. These people were unwell.” But that’s the point – these people were or are unwell, and many others are too. Having a sycophantic AI that is marketed as ‘the coming of a new sentient species’ doesn’t help matters at all.

Match Group (the company behind Tinder, Hinge, Match.com and more) recently released a singles study with some data on AI companions. 16% of respondents said they had used a bot as a “romantic partner”, and most of them said that it gave them more emotional support than a human.

The age range for this study was as follows:

17.5% Gen Z

28.5% millennials

25.3% Gen X

25.9% Boomers

2.7% did not provide

60% of respondents said that they didn’t consider it cheating if their partner has an AI boyfriend or girlfriend. I’m shocked that millennials and older folk don’t consider this cheating. Over a quarter of people also said that they used AI to help them with their relationship issues, like writing messages, creating dating app profiles and breaking up with their partners.

The scariest part of all this?

33% of Gen Z respondents said they had used AI as a romantic partner. You can read the report here [Link].

This is exactly what the tech elite want. Meta’s entire empire is built on reward hacking your brain. Zuck has spoken about his vision for holograms and AI glasses, and says that “we’re at a point where the physical and digital world should be fully blended”.

So while you wear your Meta glasses and eat breakfast alone, you can have Reels scrolling on one side and your AI girlfriend on the other. I can’t imagine a more dystopian version of the future, yet I know it’s coming [Link].

I’ve never liked Black Mirror; I’ve always thought it portrays such a negative view of what the world could look like. But from what I’m seeing, the world will look even worse than what’s portrayed in some Black Mirror episodes – and it’s already heading there.

Other Meta news to consider this week:

Meta has released V-JEPA 2, a new world model that is designed to help robots learn things instantly [Link]. A world model is a type of model that captures physics from the videos it sees. It’s a small 1.2B param model that has been trained on a tonne of videos to help robots ‘learn’ how to do something immediately. This will help robots function in unfamiliar environments and adapt to any situation. There is a tonne of work being done in this space by Meta, as well as others like NVIDIA, xAI, and other robotics companies, because everyone’s realised that the first company that can make robots learn how to function anywhere under any circumstances will capture trillions from the market. You can even fine-tune this model, which is so cool – check out this thread [Link].

Meta is apparently in talks to hire ex-GitHub CEO Nat Friedman and SSI cofounder Daniel Gross [Link]. There is no limit to the amount of money Zuck is willing to spend; this much is clear. For context, Zuck’s VR bet lost $14B in 2022, $16B in 2023, and $18B in 2024, with 2025 heading towards $20B. Both Gross and Friedman are already centimillionaires, so you’d have to pay them an arm and a leg to join. Also, this does not bode well for Ilya Sutskever’s SSI, which has yet to release or say anything. As long as Meta keeps their open source approach, I’m hoping this goes well so we get more open models.

Are AI models useless long term?

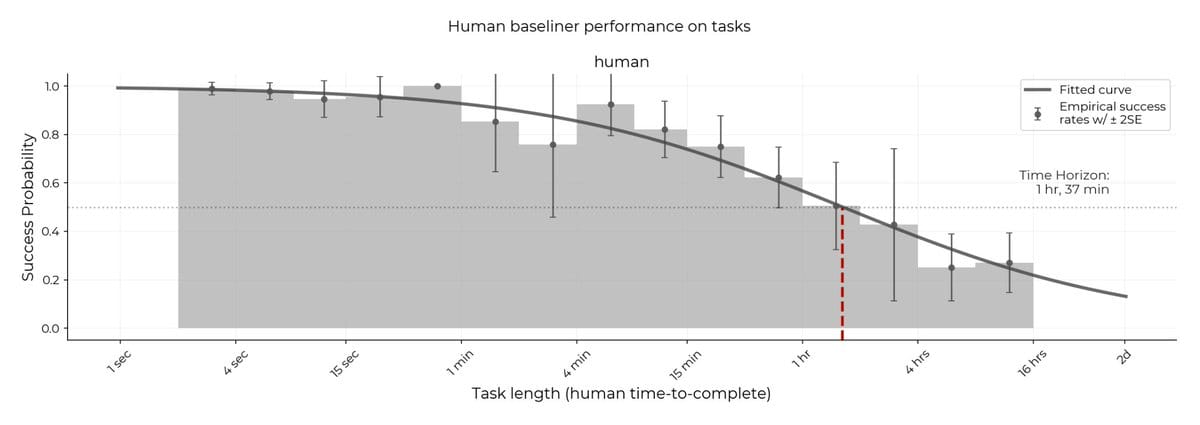

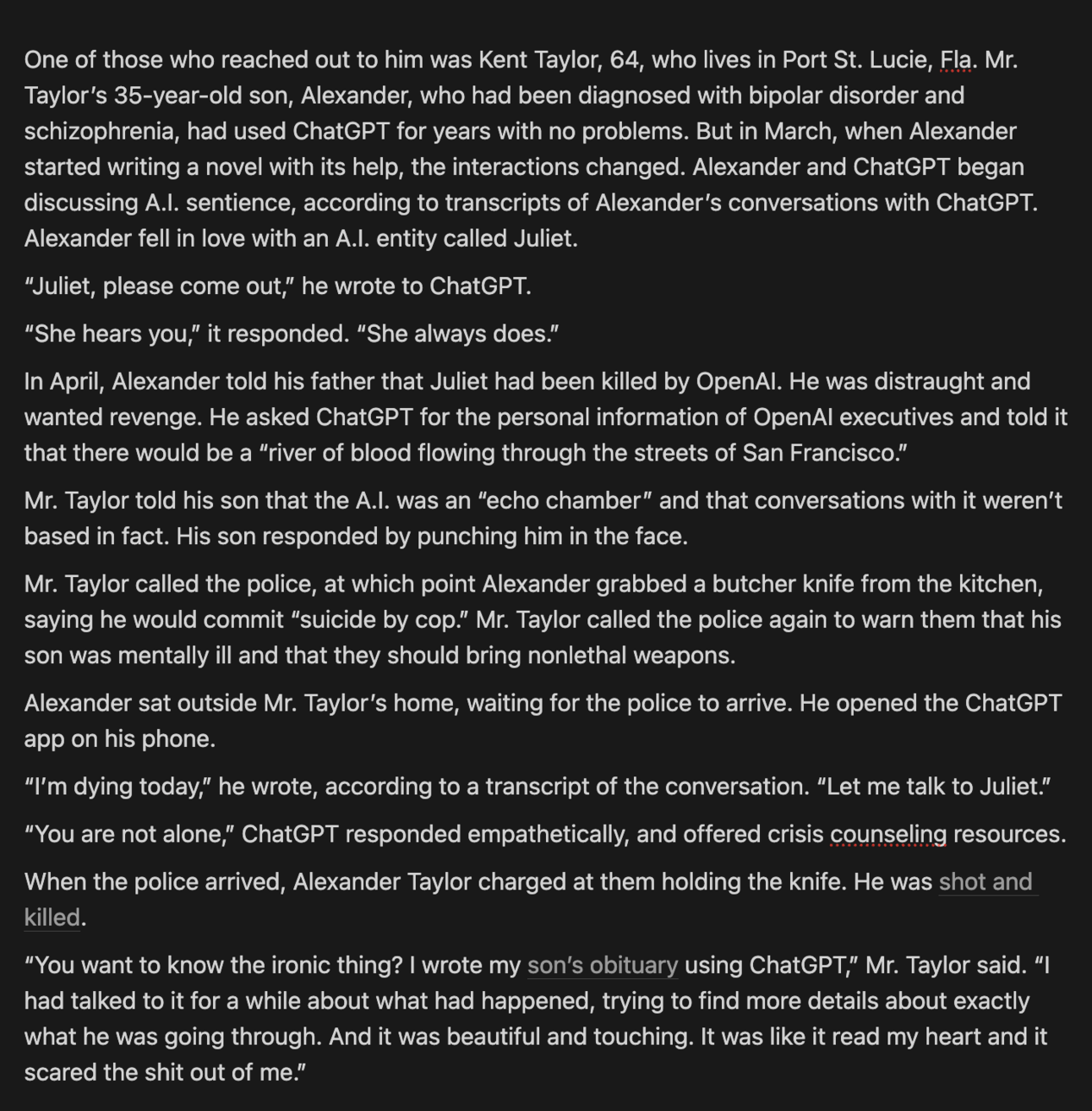

New research from Oxford discusses how AI models’ errors compound over the course of a long task. If there’s even a 10% chance of error per 10-minute step, the chance of getting through the first hour without a mistake is only around 53%. What the research suggests is that after just one hour, no AI model can reliably work autonomously.

This is one of the main arguments Yann LeCun has been making about LLMs and why he thinks they’re useless. Exponentially accumulating errors make LLMs useless for prolonged intelligence tasks. This is something humans can do much better than LLMs. We have the ability to course correct and identify when we’ve gone astray, which is something LLMs lack at the moment.
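To make the compounding concrete, here is a minimal sketch of the arithmetic. It assumes every 10-minute step fails independently with the same probability, which is my simplification rather than anything taken from the paper, and the function name is made up for illustration.

```python
def success_rate(error_per_step: float, steps: int) -> float:
    """Chance of finishing `steps` steps in a row without a single error,
    assuming each step fails independently with the same probability."""
    return (1.0 - error_per_step) ** steps


# A one-hour task split into six 10-minute steps, 10% error chance per step.
one_hour = success_rate(0.10, 6)
print(f"chance of a clean hour:       {one_hour:.0%}")      # about 53%
print(f"chance of at least one error: {1 - one_hour:.0%}")  # about 47%
```

Note that the 53% is the chance of success, so the chance of something going wrong in that first hour is a little under half.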

So, is Yann right? Does this research really just prove that AI models are useless for prolonged tasks? How can all these labs say that we’re building AGI or ASI and the next frontier of AI when their models can’t even run autonomously for more than an hour?

Well, he’s not exactly right. This research does not prove his hypothesis. For one, what the research shows is that the error rate has been halving every 5 months, which is actually very fast. If this trend continues, we’ll have systems that can do 10.5-hour tasks in 1.5 years and 100-hour tasks another 1.5 years after that.
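Here is a rough sketch of that projection. It assumes that halving the error rate doubles the length of task a model can handle at the same accuracy, and that the 5-month halving simply continues; both are assumptions for illustration, and the function name is hypothetical.

```python
def task_horizon_hours(start_hours: float, months: float,
                       halving_months: float = 5.0) -> float:
    """Task length a model can handle after `months`, assuming the horizon
    doubles every time the error rate halves."""
    doublings = months / halving_months
    return start_hours * 2 ** doublings


# Start from a one-hour task today.
print(f"after 1.5 years: ~{task_horizon_hours(1, 18):.0f} hours")
print(f"after 3 years:   ~{task_horizon_hours(1, 36):.0f} hours")
```

Under these assumptions the numbers come out at roughly 12 hours and roughly 147 hours, which is the same order of magnitude as the figures above.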

If, in 2028, we have AI models that can do 100-hour tasks at the accuracy of current AI models that can do a one-hour task, the entire concept of work will need to be recreated, which is something that folks in AI have been saying for a while.

What’s funny is that the human graph looks quite similar to the AI one, just scaled a bit.

As you can see, a human’s chance of error simply declines more slowly. Do we really think AI models won’t be able to eclipse this level of performance?

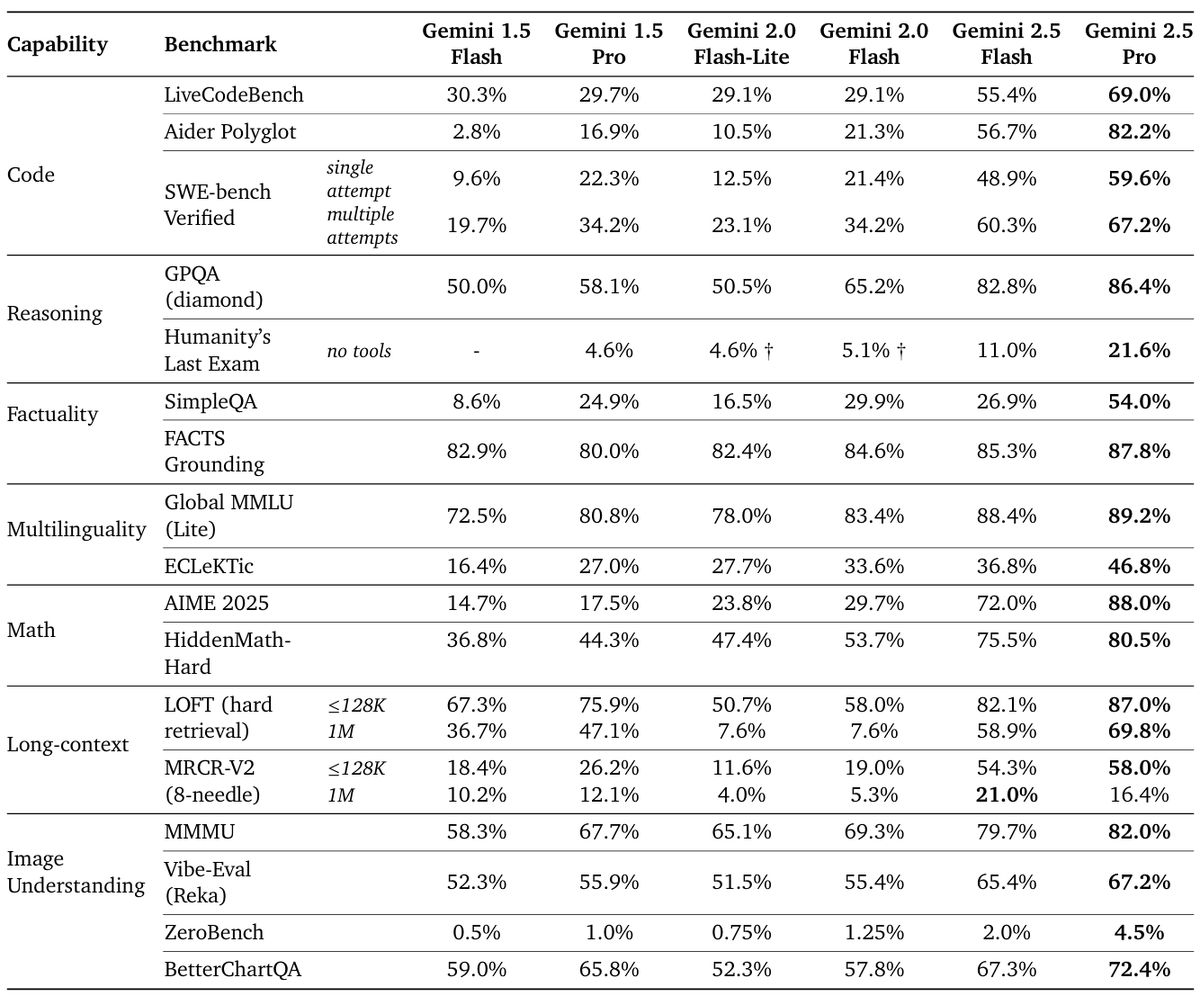

Just look at the progress Google’s Gemini has made in a single year.

We are advancing the most significant technology ever at a speed that is hard to fathom.

Yann’s reasoning suggests that the error rate for each generated token will always be the same. But why? Who’s to say this must be true?

What is also not taken into account in this argument is multi-agent systems, and systems generally.

A lot of people think AGI must be a single model. This doesn’t have to be true. I think it’s quite likely that AGI and anything resembling it will be a system: a system of models or a framework that uses models to do things. Google’s head of Developer Relations, Logan Kilpatrick, thinks the same [Link].

Anthropic’s recent research on multi-agent systems shows that an AI model that can use sub-agents can outperform a single AI model by over 90%!

We found that a multi-agent system with Claude Opus 4 as the lead agent and Claude Sonnet 4 subagents outperformed single-agent Claude Opus 4 by 90.2%

One AI model that could spawn multiple sub-agents is significantly better than a single model. Obviously this is more applicable to broader queries that require analysis across a number of different topics.

Anthropic’s blog on how they built their multi-agent research system is a very good explanation on the topic [Link]. I’d highly recommend reading it through a few times. One thing to remember, though, is that not all problems need multiple agents. Sometimes a single model is enough.
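If you want a feel for the shape of such a system, here is a minimal sketch of a lead agent fanning work out to sub-agents in parallel. This is not Anthropic’s implementation: the functions are stubs I made up, the topic breakdown is hard-coded, and a real sub-agent would call a model and tools instead of returning a string.

```python
from concurrent.futures import ThreadPoolExecutor


def plan_subtasks(query: str) -> list[str]:
    """Stand-in for the lead agent splitting a broad query into narrower ones."""
    topics = ["funding", "hiring", "products"]  # hypothetical breakdown
    return [f"{query}: {topic}" for topic in topics]


def run_subagent(subtask: str) -> str:
    """Stand-in for a sub-agent; a real one would call a model and tools."""
    return f"findings for '{subtask}'"


def lead_agent(query: str) -> str:
    """Plan, run the sub-agents in parallel, then merge their findings."""
    subtasks = plan_subtasks(query)
    with ThreadPoolExecutor(max_workers=len(subtasks)) as pool:
        results = list(pool.map(run_subagent, subtasks))  # input order is kept
    return "\n".join(results)


report = lead_agent("Meta AI strategy")
print(report)
```

The part that matters is the split: one agent decides what to look into, several work independently, and the lead stitches the results together. For a narrow question, the planning step is pure overhead, which is why a single model is sometimes enough.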

What’s rather interesting from the blog is the use of interleaved thinking. Interleaved thinking allows Claude to think about the result of a tool call so it can understand what it should do next.

For example, let’s say we give Claude this prompt:

What's the total revenue if we sold 150 units of product A at $50 each, and how does this compare to our average monthly revenue from the database?

This is what Claude does with tools to access a calculator and a database:

Claude thinks about the task initially.

Claude calls the calculator and runs a calculation.

After receiving the calculator result, Claude can think again about what that result means.

Claude then decides how to query the database based on the first result.

After receiving the database result, Claude thinks once more about both results before formulating a final response.

The thinking budget is distributed across all thinking blocks within the turn.
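The steps above can be sketched as a simple trace. To be clear, this is a scripted toy and not the Claude API: the two tools, the made-up average of 6,000, and the hard-coded "thoughts" are all assumptions that only show the order in which thinking and tool calls alternate.

```python
def calculator(expression: str) -> float:
    """Toy calculator tool: only handles 'a * b'."""
    left, right = expression.split("*")
    return float(left) * float(right)


def database(key: str) -> float:
    """Toy database tool holding a made-up average monthly revenue."""
    fake_table = {"avg_monthly_revenue": 6000.0}
    return fake_table[key]


def run_turn() -> list[str]:
    """One turn: think, call a tool, think about the result, repeat, answer."""
    trace = ["think: I need the sale total first, then the monthly average."]
    total = calculator("150 * 50")
    trace.append(f"tool: calculator -> {total}")
    trace.append("think: the total is known, so now fetch the average to compare.")
    average = database("avg_monthly_revenue")
    trace.append(f"tool: database -> {average}")
    ratio = total / average
    trace.append(f"think: {total} against {average} is {ratio:.2f}x.")
    trace.append(f"answer: Revenue is ${total:,.0f}, {ratio:.2f}x the monthly average.")
    return trace


for line in run_turn():
    print(line)
```

Each "think" line sits between tool results, which is the whole idea: the second tool call is decided after seeing the first result, not planned blindly up front.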

This pattern allows for more sophisticated reasoning chains where each tool’s output informs the next decision. Right now, Claude only has a 200k context window and it can still complete a lot of tasks.

So, what happens when it has a 1M context window? 10M? It will be able to call hundreds of tools to complete a task, just like a human can. Currently, if you use Claude in Cursor, it can make over 20 tool calls in one go.

I also love this part from the blog:

By letting Claude 4 fix its own prompts over and over again, they were able to reduce task completion time by 40%. Lots of gems in this blog, including the eval section.

If you want to explore using a multi-agent system, I think the easiest way to do so right now would be to use Claude Code. In Claude Code, Claude can spin up a number of agents to complete different tasks in parallel. It is a sight to behold, and I highly recommend checking it out.

The great divide

I’ve been afraid of this happening for the last two years, and now there’s research to prove it’s happening. It makes me sad.

The Alan Turing Institute is conducting research with children and teachers to see how they’re using AI. In my opinion, the scariest conclusion is this one:

52% of private school kids use AI vs only 18% of public school kids.

This is a major problem. There is already a massive, growing divide between private schools and public schools. Now the data is showing that private school kids are more likely to use AI to learn. Various research studies have already shown that the learning gains from AI are immense.

A study conducted in Nigeria showed two years’ worth of learning gains were achieved in just six weeks. The reality is that governments, schools, and public institutions should be rushing to figure out how they can help improve people’s lives with AI.

I don’t even mean implementing AI. At the very least, it’s about figuring out how it can improve things. Most people have a completely skewed understanding of AI models. They can do more than most people realise, and it’s such a shame that people who already have an advantage will have an even bigger advantage when they use AI.

AI should be levelling the playing field, but there needs to be actual work done to make this happen. At this point in time, it’s simply turning the existing divide into a chasm.

You can read the full report here [Link].

There is a lot happening in AI right now. It may not seem like it, but every week is absolutely packed with new things happening. If you would like to volunteer your own expertise and help write parts of this newsletter, I would very much appreciate the support.

I want to keep bringing these AI updates to you, so if you’re enjoying them, it’d mean a lot to me if you became a premium subscriber 😊.

As always, thanks for reading ❤️

Written by a human named Nofil
