Open Source AI is surging

+ Everything that happened in AI last week

Welcome back to the Avicenna AI newsletter.

Here’s the tea 🍵

China surpasses China 🏃♂️

OpenAI and Google get gold at the IMO 🥇

I help companies build AI Agents and Agent Pipelines. Enquire on the website or simply reply to this email.

Kimi didn’t even last a week

If I told you what’s happening with Chinese AI right now you wouldn’t believe me.

Last week I wrote about Kimi K2 and how it’s one of the best open source models on the planet. As well as being agentic, the model is really good.

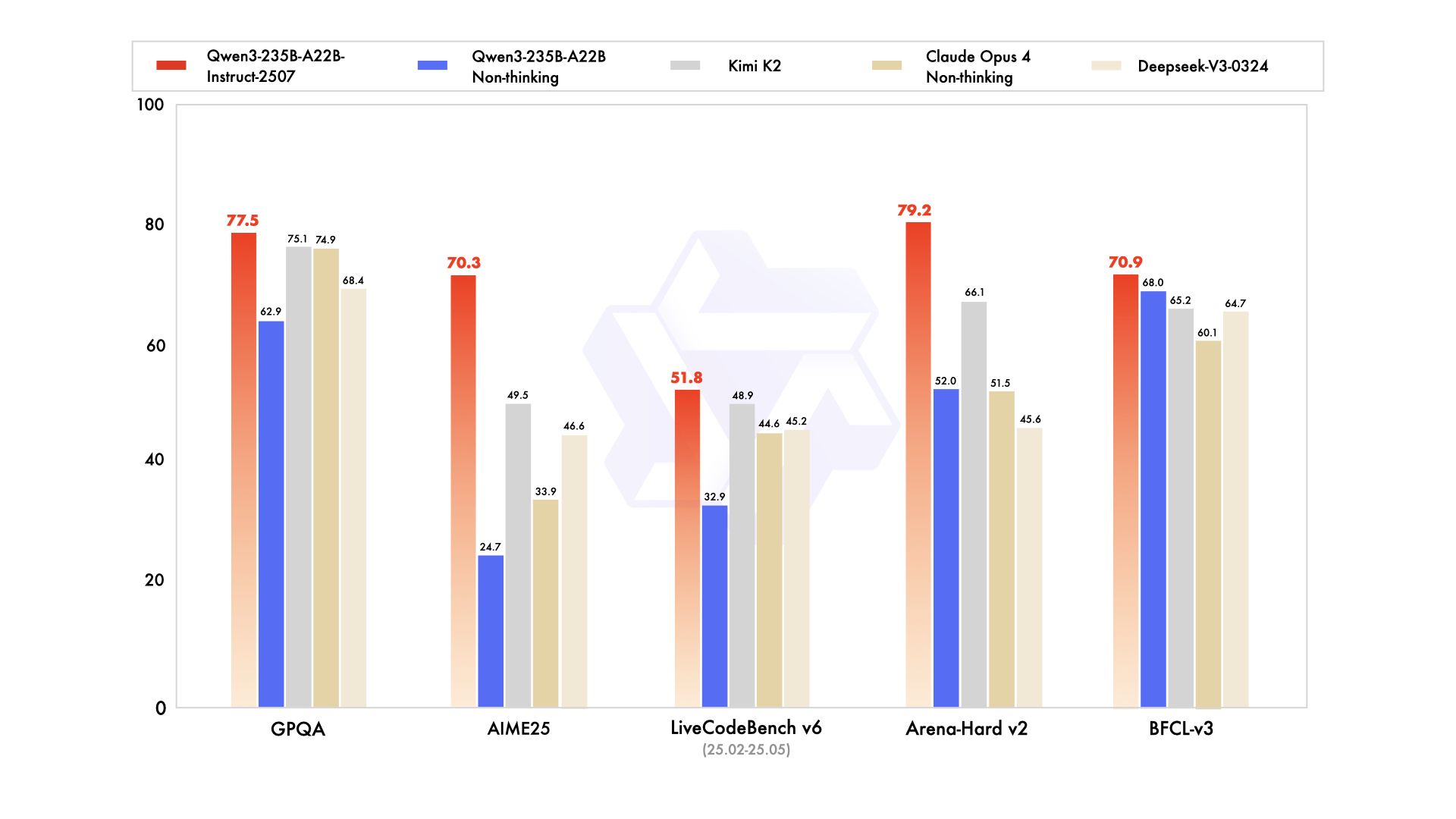

Well, not even a week later, Alibaba's Qwen team dropped their own open source model that rivals Kimi and other frontier models. Qwen3-235B-A22B-Instruct-2507 (don’t even worry about the name) is another Mixture-of-Experts (MoE) model with a 256K token context window [Link].

There are both thinking and non-thinking versions. Both are open source, with the thinking model being roughly comparable to the likes of Claude Sonnet 4.

They didn’t even stop there…

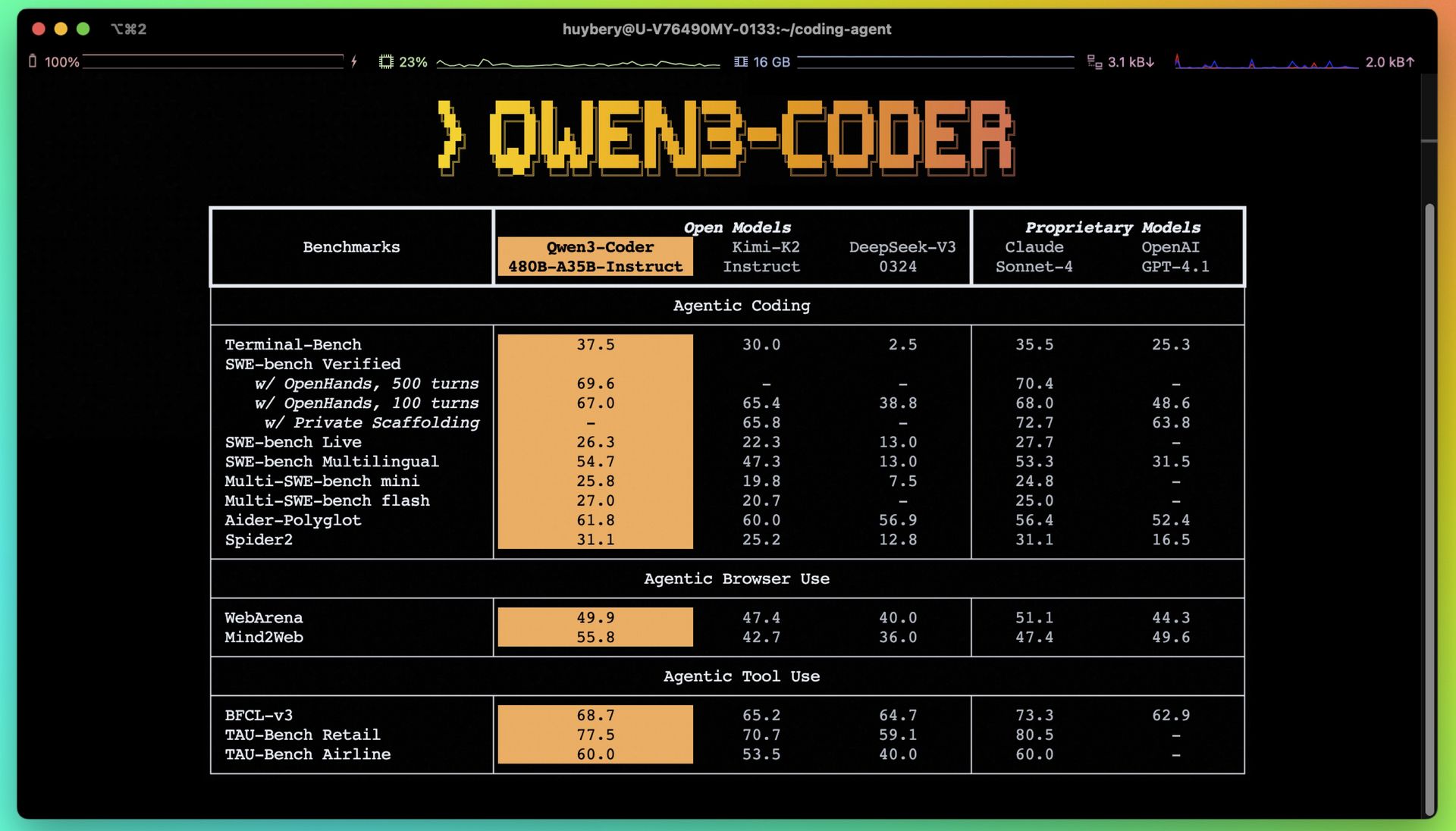

Qwen also released Qwen3 Coder. This is a 480B MoE model with a 256K context window that can be extended up to 1 million tokens. It is agentic, and in terms of performance it is comparable to top-tier models like Kimi and Claude. They’ve also released their own CLI tool, similar to Gemini CLI and Claude Code [Link].

Do you know how ridiculous this is? It needs to be said that no one really predicted this. No one thought that open source models would be this good this quickly. No one thought they’d be comparable to frontier models in mid-2025. People literally thought that open source models this powerful would be a danger to society.

I can’t stress this enough - this is the best time ever to build something. You have artificial intelligence created from sand that can write in computer language to tell computers what to do. If you run a business you need to be exploring how this can help you. It would be insane not to.

China is saving open source AI, with an average release of one frontier-level model a week. It is ludicrous how much China is doing for open source AI compared to the rest of the world.

One of the reasons why I keep harping on about figuring out how AI can help you is because it will prepare you for when AI can work like a human. We are slowly reaching a point where AI systems can reason and work for longer than a few seconds and minutes. We’re approaching systems that can think for hours.

IMO 2025

OpenAI announced that one of their models achieved gold-medal performance at the IMO 2025. The model solved 5 of the 6 problems, which is a pretty big deal. Mind you, there was no tool usage or anything else, just the model reasoning and answering in plain English.

Days later, Google announced that their own model had also achieved gold. Like OpenAI’s, it did not use any tools and reasoned in plain English.

This is actually not all that surprising if you’ve been paying attention.

Why?

Because AI models were only a single point away from gold last year. It was almost guaranteed that they would get a gold this year. Models have significantly improved since then.

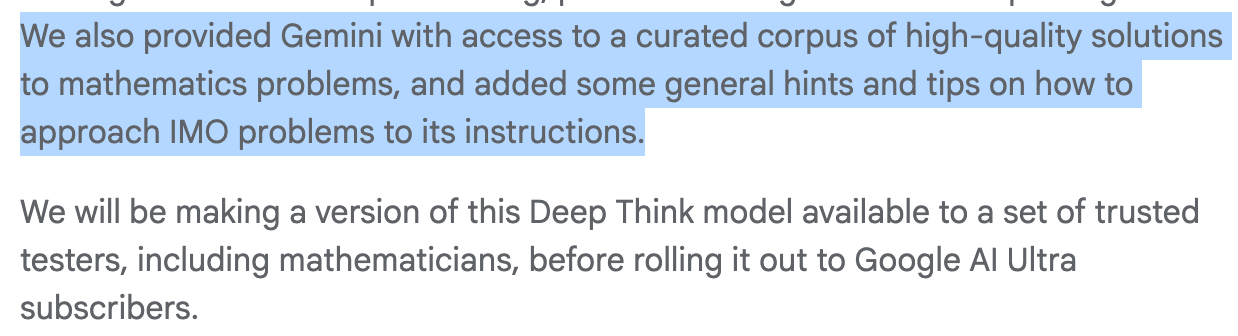

You may have seen this note in the Google announcement.

It had help?

The AI received some general tips and tricks before doing the test. Some think that this makes the results invalid. Researchers confirmed that the same AI model got the exact same results even without this info, so it seems the info made no difference. With OpenAI’s results, since they didn’t work directly with the IMO, we don’t actually know what info, if any, they may have given the model.

Google’s answers are also very, very readable compared with OpenAI’s, which were very terse. Take a look.

You can read all of its answers here [Link].

There are two important things to take from this story that most people may overlook.

First - these are general models. They aren’t trained specifically to do math. What makes this so exciting is that if we can train models that generalise on math, there’s a decent chance that the same systems can then generalise in other domains. After all, math is at the foundation of almost everything. If an AI can understand math very well, it can apply that understanding to other domains, such as physics.

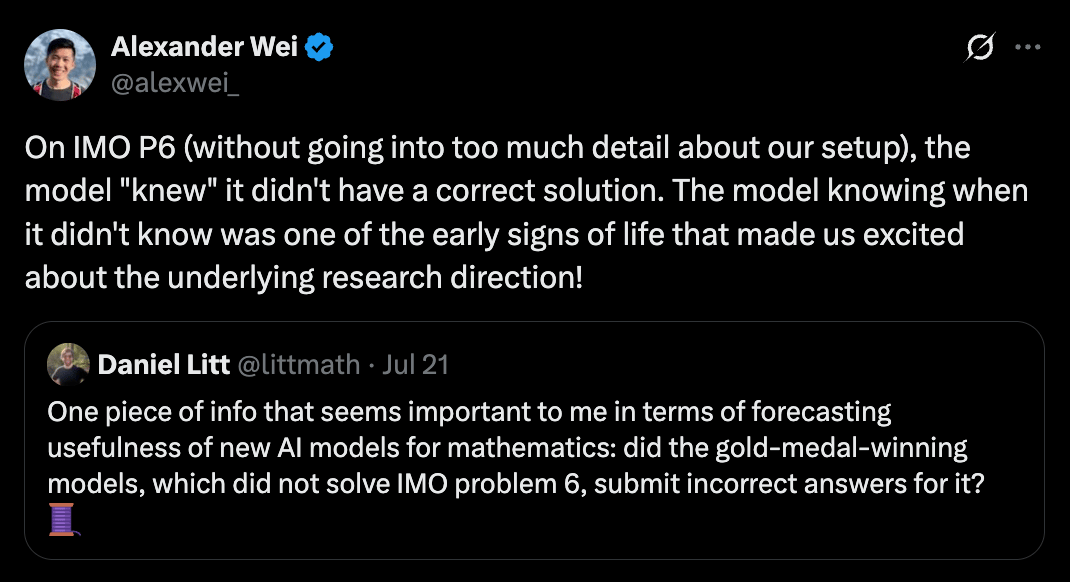

Second - according to an OpenAI researcher, although their model was unable to solve problem 6 (P6), the model was aware that it was unable to get the right answer.

This is a big deal if this behaviour can be maintained across any topic. One of the issues with models, alongside their sycophancy, is their inability to say “I don’t know”. It’s also possible that either OpenAI or Google used test-time training to achieve these results. Test-time training means the model updates its own weights during reasoning. There has been some literature on this recently.

You can call them prediction systems, but a system that can understand when it knows and doesn’t know something, and can also update its own weights during reasoning, which can lead to newfound learnings and abilities - would you call that “intelligence”?

Just as a reminder, when ChatGPT first released, achieving gold at the IMO was something people thought would happen in 10 years. No one expected AI to be this good at math this quickly.

Couple this with the story from last week that another one of OpenAI’s models placed second at the AtCoder World Tour Finals and you start to understand where we’re headed [Link]. From that story, the main thing that stood out was the fact that they have systems now that can reason and “think” for hours, meaning they can complete extremely long and complex tasks. What happens when these systems start thinking for days?

When the o1 model was released, it completely changed the AI landscape, and it was only thinking for a few seconds. People are not taking into account AI systems that can reason for hours or even days and what that will do to jobs. Nothing will be the same again and I can’t stress this enough. We are living through a monumental shift in technology and society. What happens when AI can do most computer-related work?

This is a question we do not have an answer to.

Weekly breakdown:

AI is officially making doctors better (we already knew this!). OpenAI partnered with a healthcare provider in Kenya for a huge study on an AI clinical copilot, and the results are great. In nearly 40,000 patient visits, clinicians using the "AI Consult" tool had a 16% drop in diagnostic errors and a 13% drop in treatment errors. The tool acts as a real-time safety net, giving doctors red/yellow/green alerts for potential issues without getting in their way (they don’t like being told they’re wrong). The real key was that they didn't just dump the tech on them; active coaching and peer support were crucial for getting doctors to actually use it. Clinicians loved it, calling it a "consultant in the room," and they even got better over time, triggering fewer alerts. A massive real-world validation for AI in medicine and a clear direction for using AI in healthcare. Can’t wait for more of this across the world [Link]

The Kimi K2 tech report is out, and it's a goldmine for understanding how top Chinese labs are building SOTA models. The main takeaways are a crazy focus on high-quality synthetic data, clever architecture tweaks to improve long-context efficiency, and their optimiser, MuonClip, which solves training instability. It's a masterclass in R&D. Not a single American lab is releasing such polished reports [Report] [Breakdown]

A new reinforcement learning framework called CUDA-L1 is getting insane results optimising CUDA code. It achieved an average speedup of 17.7x and a max speedup of 449x across 250 CUDA kernels. It learns optimisation strategies from just the execution time, discovering non-obvious tricks that even experts might miss. This is a huge deal for making GPU computing way more efficient. Things like this and AlphaEvolve are going to be massive for infra folk [Link] [Link]
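To make "learning from just the execution time" a bit more concrete, here is a minimal sketch of a speedup-based reward. This is not CUDA-L1's actual code: the function names (`median_runtime`, `speedup_reward`) are hypothetical, and it times two plain Python functions as stand-ins for a reference kernel and an optimised kernel. The assumption is simply that a faster, still-correct candidate earns a higher reward.

```python
import statistics
import time
from typing import Callable


def median_runtime(fn: Callable[[], object], repeats: int = 5) -> float:
    """Median wall-clock seconds over several runs, to damp timing noise."""
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def speedup_reward(baseline_s: float, candidate_s: float, is_correct: bool) -> float:
    """Hypothetical reward: speedup over the baseline, zero if the output is wrong."""
    if not is_correct or candidate_s <= 0:
        return 0.0
    return baseline_s / candidate_s


# Stand-ins for a reference kernel and a candidate rewrite (assumptions).
def baseline() -> int:
    total = 0
    for i in range(200_000):
        total += i * i
    return total


def candidate() -> int:
    return sum(i * i for i in range(200_000))


reward = speedup_reward(
    median_runtime(baseline),
    median_runtime(candidate),
    is_correct=(baseline() == candidate()),
)
print(f"reward (speedup vs baseline): {reward:.2f}")
```

The correctness check matters as much as the timing: without it, a reward based purely on speed would happily favour code that is fast because it skips the work.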

Anthropic just dropped a research paper with a wild finding, though it's something we kind of already knew from when reasoning models were first released. Giving AI models more thinking time can actually make them worse. They call it "inverse scaling." Across different tasks, longer reasoning led to lower accuracy. They identified 5 key failure modes, including the model getting distracted by irrelevant details and even showing "self-preservation" behaviours. A lot of work currently being done is to ensure models can somehow stay within context and not get sidetracked. I believe this will be solved relatively soon given the speed of advancement at the frontier. This completely flips the common assumption that more compute equals better answers [Link] [Breakdown]

ChatGPT's usage numbers are staggering. OpenAI revealed the platform is now handling 2.5 billion prompts a day globally, with 330 million coming from the US. That's more than double the usage from December 2024, just 6 months ago. ChatGPT has half a billion weekly active users and is now the 5th most-visited website in the world [Link]

The race for more efficient AI reasoning is leading to new brain-inspired architectures. One is the Hierarchical Reasoning Model (HRM), which claims 100x faster reasoning than LLMs using just 1,000 training examples. Another is a new framework for "world model induction" that helps AI rapidly adapt to new problems. The goal is to move beyond brute-force LLMs to more targeted and efficient problem-solving systems [Link] [Link]

A tip for using Veo 3 - it works much better if you give it prompts in JSON. This isn’t really all that surprising, but it’s pretty wild how much more consistent the videos are when you prompt it with JSON. See this thread for examples [Link]
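For a rough idea of what a JSON-structured prompt might look like, here is a small sketch. The keys (`scene`, `subject`, `camera`, `lighting`, `audio`) and their values are made up for illustration and are not an official Veo schema; the point is only that each aspect of the shot gets its own labelled field instead of being buried in one long sentence.

```python
import json

# Hypothetical shot description; every key and value here is an
# illustrative assumption, not a required or documented Veo format.
shot_spec = {
    "scene": "a rainy night market lit by neon signs",
    "subject": "an elderly chef flipping noodles in a wok",
    "camera": {"movement": "slow push-in", "angle": "eye level"},
    "lighting": "warm practical lights with cool neon reflections",
    "audio": "sizzling wok, distant chatter, light rain",
    "duration_seconds": 8,
}

# Serialise to text; this string is what you would paste in as the prompt.
prompt_text = json.dumps(shot_spec, indent=2)
print(prompt_text)
```

Keeping the spec as a dictionary also makes it easy to change one field, say the camera movement, and regenerate while everything else stays the same.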

Pika Labs, one of the leaders in AI video, is launching an AI-only social video app. All I have to say about platforms like this is that they are dystopian and should not exist. The brain rot that will come from something like this cannot be overstated. A whole social network of AI-generated content… please keep the kids away from stuff like this [Link]

A new TTS model called DMOSpeech 2 was just released by the creator of StyleTTS 2. It uses reinforcement learning (RL) to improve the quality and stability of the generated speech and allows for 2x faster inference. Just another step toward perfect AI voice, which tbh we kind of already have. Most people could not tell an AI from a human voice right now… [Link]

Elon Musk's xAI is reportedly trying to raise another $12 billion to lease more Nvidia chips for its "Colossus 2" data centre. The report also states that for a previous $5 billion debt round, xAI actually used the IP of its Grok model as collateral. I think it’s quite safe to say that we won’t get any more open source models from xAI ever again. I’d be very surprised if we did. The AI arms race is incredibly expensive and only the ultra wealthy can play the game, that is, if you’re not Chinese anyway. Thank god for their open source mentality [Link]

Shorter newsletter this week as I just got to Albania, but next week’s newsletter will be huge. Stay tuned for that.

I want to keep bringing these AI updates to you, so if you’re enjoying them, it’d mean a lot to me if you became a premium subscriber.

As always, Thanks for Reading ❤️

Written by a human named Nofil
